EDA - Visualization

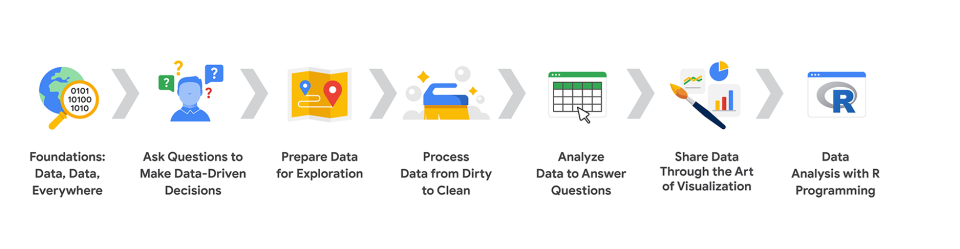

In Data Analysis Roadmap we learned that data analysis is the collection, transformation, and organization of data in order to draw conclusions, make predictions, and drive informed decision-making. The steps needed to perform such a task were summarized in

We later learned that data analysis consists of six types:

- Descriptive

- Exploratory

- Inferential

- Predictive

- Causal

- Mechanistic

This chapter is dedicated to Exploratory Data Analysis (EDA).

The goal of exploratory analysis is to examine or explore the data and find relationships that weren’t previously known. Exploratory analyses explore how different measures might be related to each other but do not confirm that relationship as causative.

Definition

Exploratory data analysis, or EDA for short, is what statisticians call the use of visualization and transformation to explore data in a systematic way. EDA is an iterative cycle. You:

- Generate questions about your data.

- Search for answers by visualizing, transforming, and modelling your data.

- Use what you learn to refine your questions and/or generate new questions.

EDA is not a formal process with a strict set of rules. More than anything, EDA is a state of mind. During the initial phases of EDA feel free to investigate every idea that occurs to you. Some of these ideas will pan out, and some will be dead ends. As your exploration continues, you will home in on a few particularly productive insights that you’ll eventually write up and communicate to others.

EDA is an important part of any data analysis, even if the primary research questions are handed to you on a platter, because you always need to investigate the quality of your data. Data cleaning is just one application of EDA: you ask questions about whether your data meets your expectations or not. To do data cleaning, you’ll need to deploy all the tools of EDA: visualization, transformation, and modelling.

Exploratory techniques are typically applied before formal modeling commences and can help inform the development of more complex statistical models. Exploratory techniques are also important for eliminating or sharpening potential hypotheses about the world that can be addressed by the data.

Before we get started let’s think of EDA as having six principles.

Principles

Comparison

Show a comparison. Asking “compared to what?” is always valuable. If we say “my dog is bigger”, bigger than what? How do we get a true perspective if we don’t relate the comparison to a baseline?

Causality

Show causality, or a mechanism that explains how your theory of the data works.

Multivariate

Use more than two variables. If you restrict yourself to two variables, you can be misled and draw incorrect conclusions.

Evidence

Integrate evidence; don’t limit yourself to one form of expression.

Document

Describe and document the evidence. Documentation is key to any analysis, whether of data, finance, business, or anything else. If a neutral party cannot read your documentation and replicate your findings, you’ve just wasted a whole lot of time.

Content

Analytical presentations ultimately stand or fall depending on the quality, relevance, and integrity of their content.

Questions

“There are no routine statistical questions, only questionable statistical routines.” — Sir David Cox

“Far better an approximate answer to the right question, which is often vague, than an exact answer to the wrong question, which can always be made precise.” — John Tukey

Your goal during EDA is to develop an understanding of your data. The easiest way to do this is to use questions as tools to guide your investigation. When you ask a question, the question focuses your attention on a specific part of your dataset and helps you decide which graphs, models, or transformations to make.

EDA is fundamentally a creative process. And like most creative processes, the key to asking quality questions is to generate a large quantity of questions. It is difficult to ask revealing questions at the start of your analysis because you do not know what insights can be gleaned from your dataset. On the other hand, each new question that you ask will expose you to a new aspect of your data and increase your chance of making a discovery.

There is no rule about which questions you should ask to guide your research. However, two types of questions will always be useful for making discoveries within your data. You can loosely word these questions as:

- What type of variation occurs within my variables?

- What type of covariation occurs between my variables?

The rest of this page will look at these two questions. We’ll explain what variation and covariation are, and we’ll show you several ways to answer each question.

Variation

Variation is the tendency of the values of a variable to change from measurement to measurement. You can see variation easily in real life; if you measure any continuous variable twice, you will get two different results.

This is true even if you measure quantities that are constant, like the speed of light. Each of your measurements will include a small amount of error that varies from measurement to measurement. Variables can also vary if you measure across different subjects (e.g., the eye colors of different people) or at different times (e.g., the energy levels of an electron at different moments). Every variable has its own pattern of variation, which can reveal interesting information about how it varies between measurements on the same observation as well as across observations. The best way to understand that pattern is to visualize the distribution of the variable’s values.
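As a minimal sketch of visualizing a distribution, the snippet below uses R's built-in `state.x77` matrix and `state.region` factor. That choice of data is an assumption made for illustration, since the text above does not name a dataset. A histogram shows the variation in a continuous variable, and a bar chart shows the variation in a categorical one.

```r
# Built-in data (assumed for illustration): state.x77 is a numeric matrix,
# state.region is a factor with one entry per US state
life_exp <- state.x77[, "Life Exp"]

# Continuous variable: bin the values and count the observations per bin
h <- hist(life_exp, main = "Life expectancy by state", xlab = "Years")

# Categorical variable: count the observations per level
region_counts <- table(state.region)
barplot(region_counts, main = "States per region")
```

`hist()` also returns the bin counts it drew (here in `h$counts`), which is handy when you want the numbers behind the picture.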

Typical values

Typical values are the ones that occur most often. To find them, look at the distribution of a variable and ask:

- Which values are the most common? Why?

- Which values are rare? Why? Does that match your expectations?

- Can you see any unusual patterns? What might explain them?

Visualizations can also reveal clusters, which suggest that subgroups exist in your data. To understand the subgroups, ask:

- How are the observations within each subgroup similar to each other?

- How are the observations in separate clusters different from each other?

- How can you explain or describe the clusters?

- Why might the appearance of clusters be misleading?

Some of these questions can be answered with the data while some will require domain expertise about the data. Many of them will prompt you to explore a relationship between variables, for example, to see if the values of one variable can explain the behavior of another variable. We’ll get to that shortly.
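One way to look for clusters is sketched below, using R's built-in `faithful` data (eruption lengths of the Old Faithful geyser). The dataset, the bin width, and the 3-minute cut point are all assumptions chosen for illustration, not values taken from the text above.

```r
# Built-in data (assumed for illustration): eruption lengths in minutes
eruptions <- faithful$eruptions

# A fairly narrow bin width makes separate groups of eruptions easier to see
h <- hist(eruptions, breaks = seq(1.5, 5.5, by = 0.25),
          main = "Old Faithful eruptions", xlab = "Eruption length (minutes)")

# Split at an eyeballed cut point of 3 minutes (an assumption) and
# summarise each group to see how the subgroups differ
group <- ifelse(eruptions < 3, "short", "long")
tapply(eruptions, group, summary)
```

Changing the bin width is worth trying: clusters that appear at one width can blur or vanish at another, which is one reason their appearance may be misleading.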

Unusual values

Outliers are observations that are unusual; data points that don’t seem to fit the pattern. Sometimes outliers are data entry errors, sometimes they are simply values at the extremes that happened to be observed in this data collection, and other times they suggest important new discoveries.

Outliers can be very hard to find in a histogram if you have a lot of data, so use other plots, such as boxplots, to expose them. Once you have found unusual values, you can either:

- Drop unusual values from your dataset, or

- Replace them with NA
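The two options above can be sketched in base R. The data frame `df` and its values are hypothetical, and flagging outliers with the boxplot rule (`boxplot.stats()`) is just one possible choice, not necessarily the right one for your data.

```r
# Hypothetical measurements; 250 is a deliberately planted unusual value
df <- data.frame(id = 1:8,
                 height = c(160, 172, 168, 250, 175, 165, 170, 169))

# Flag values beyond 1.5 times the hinge spread, the rule a boxplot uses
out_vals <- boxplot.stats(df$height)$out
is_out <- df$height %in% out_vals

# Option 1: drop the rows that contain unusual values
dropped <- df[!is_out, ]

# Option 2: keep the rows but replace the unusual values with NA
replaced <- df
replaced$height[is_out] <- NA
```

Replacing with `NA` keeps the other measurements recorded for the same observation, whereas dropping the row discards them too.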

Covariation

If variation describes the behavior within a variable, covariation describes the behavior between variables. Covariation is the tendency for the values of two or more variables to vary together in a related way.

The best way to spot covariation is to visualize the relationship between two or more variables.

Remember you can have covariations between:

- Categorical and numerical variables

- Two categorical variables

- Two numerical variables
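A minimal base R sketch of one plot or summary for each of the three cases follows. It assumes a `state` data frame built from R's built-in `state.x77` and `state.region`; that construction is a stand-in of my own, and the choice of variables is illustrative only.

```r
# Assumed stand-in data frame: built-in state.x77 plus a region column.
# data.frame() turns the column name "Life Exp" into Life.Exp.
state <- data.frame(state.x77, region = state.region)

# Categorical and numerical: distribution of the number within each category
boxplot(Life.Exp ~ region, data = state, ylab = "Life expectancy")

# Two categorical variables: count each combination of levels
region_by_division <- table(state.region, state.division)

# Two numerical variables: scatterplot
plot(state$Income, state$Life.Exp,
     xlab = "Income", ylab = "Life expectancy")
```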

Patterns

If a systematic relationship exists between two variables it will appear as a pattern in the data. If you spot a pattern, ask yourself:

- Could this pattern be due to coincidence (i.e. random chance)?

- How can you describe the relationship implied by the pattern?

- How strong is the relationship implied by the pattern?

- What other variables might affect the relationship?

- Does the relationship change if you look at individual subgroups of the data?

Patterns in your data provide clues about relationships, i.e., they reveal covariation. If you think of variation as a phenomenon that creates uncertainty, covariation is a phenomenon that reduces it. If two variables covary, you can use the values of one variable to make better predictions about the values of the second. If the covariation is due to a causal relationship (a special case), then you can use the value of one variable to control the value of the second.

Models

Models are a tool for extracting patterns out of data.

For example, consider the diamonds data. It’s hard to understand the relationship between cut and price, because cut and carat, and carat and price are tightly related. It’s possible to use a model to remove the very strong relationship between price and carat so we can explore the subtleties that remain.
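A rough sketch of that idea, assuming the `diamonds` data that ships with the ggplot2 package: fit a linear model of price on carat (the log scale is my assumption, chosen because it makes the relationship closer to linear), then look at what is left over. This is one possible approach, not the only way to model the relationship.

```r
library(ggplot2)  # provides the diamonds data set

# Model price as a function of carat; logs are an assumption of this sketch
mod <- lm(log(price) ~ log(carat), data = diamonds)

# The residuals are the part of price that carat does not explain.
# exp() puts them back on a ratio scale: 1 means "priced as predicted".
diamonds2 <- diamonds
diamonds2$resid <- exp(residuals(mod))

# With the effect of carat removed, compare the remaining price across cuts
boxplot(resid ~ cut, data = diamonds2,
        ylab = "Price relative to carat-based prediction")
```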

Plotting Systems

As there are many plotting systems, I’ll only cover Lattice, Base and ggplot2. I don’t use Lattice much so I’ll skim over it.

Lattice

Unlike the Base System, lattice plots are created with a single function call such as xyplot or bwplot. Margins and spacing are set automatically because the entire plot is specified at once. The lattice system is most useful for conditioning types of plots which display how y changes with x across levels of z. The variable z might be a categorical variable of your data. This system is also good for putting many plots on a screen at once. The lattice system has several disadvantages. First, it is sometimes awkward to specify an entire plot in a single function call. Annotating a plot may not be especially intuitive. Second, using panel functions and subscripts is somewhat difficult and requires preparation. Finally, you cannot “add” to the plot once it is created as you can with the base system.
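The lattice examples in this section refer to a data frame called `state`, which is not defined on this page. A minimal sketch of one way to build something similar, assuming it resembles R's built-in `state.x77` matrix with a `region` column taken from `state.region`, is shown below together with a single-call lattice plot using `bwplot`.

```r
library(lattice)

# Assumed reconstruction of the state data frame from built-in data.
# data.frame() converts the column name "Life Exp" to Life.Exp.
state <- data.frame(state.x77, region = state.region)

# One function call specifies the whole plot; the result is a trellis object
p <- bwplot(Life.Exp ~ region, data = state, ylab = "Life expectancy")
print(p)
```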

    table(state$region)

Let’s use the lattice command xyplot to see how life expectancy varies with income in each of the four regions. The first argument is a formula indicating that we’re plotting life expectancy as it depends on income for each region. The second argument, data, is set equal to state. This allows us to use “Life.Exp” and “Income” in the formula instead of specifying the dataset state for each term (as in state$Income). The third argument, layout, is set equal to the two-long vector c(4,1), which asks for four columns and one row of panels.

    library(lattice)
    xyplot(Life.Exp ~ Income | region, data=state, layout=c(4,1))

The data is all plotted in one row. Try this:

    xyplot(Life.Exp ~ Income | region, data=state, layout=c(2,2))

ggplot2

It’s a hybrid of the base and lattice systems. It automatically deals with spacing, text, titles (as Lattice does) but also allows you to annotate by “adding” to a plot (as Base does), so it’s the best of both worlds. Although ggplot2 bears a superficial similarity to lattice, it’s generally easier and more intuitive to use. Its default mode makes many choices for you but you can still customize a lot. The package is based on a “grammar of graphics” (hence the gg in the name), so you can control the aesthetics of your plots.

    library(ggplot2)
    head(mpg)
    qplot(displ, hwy, data=mpg)

We’ll have several sections covering ggplot2 later.
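Recent ggplot2 releases mark `qplot()` as deprecated, so here is a sketch of the same scatterplot written with `ggplot()` and a point layer. The axis labels are my own additions, included to show the "adding to a plot" idea described above.

```r
library(ggplot2)

# Same plot as the qplot() call: engine displacement against highway mileage
p <- ggplot(mpg, aes(x = displ, y = hwy)) +
  geom_point()

# Annotate by adding to the existing plot object
p <- p + labs(x = "Engine displacement (litres)",
              y = "Highway miles per gallon")
print(p)
```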

Base

We’ll see plenty of examples of Base in the next two sections.

We’ll start with the basics of EDA using R’s built-in plot() function and proceed from there.